
Why should healthcare businesses adopt cloud computing?

Cloud computing has long been a foundational technology across industries. Today, its importance in healthcare, particularly for small and medium-sized businesses (SMBs), is more evident than ever.

The healthcare cloud infrastructure market is expected to expand at a Compound Annual Growth Rate (CAGR) of 16.7%, reaching an estimated USD 193.4 billion by 2030. Cloud computing is no longer just an option but a necessity for the healthcare industry. For healthcare SMBs, this growth signals an opportunity to streamline operations, improve outcomes, and stay competitive in a rapidly evolving market.

What is cloud computing for healthcare?

Cloud computing in healthcare refers to using remote servers hosted on the internet to store, manage, and process healthcare data rather than relying on local servers or personal computers. This technology enables healthcare providers to retrieve patient records, exchange medical information, and collaborate effortlessly, regardless of location.

A key benefit of cloud computing in healthcare is strong, built-in security. Given the sensitive nature of healthcare data, cloud services offer advanced security features such as encryption, multi-factor authentication, and regular backups. Many cloud providers also offer HIPAA-eligible services and will sign a Business Associate Agreement (BAA), giving healthcare providers an extra layer of confidence, although compliance remains a responsibility shared between the provider and the customer.

How is cloud computing transforming healthcare?

Cloud computing is reshaping healthcare by making data more accessible, operations more efficient, and patient care more personalized. With AWS-powered solutions, healthcare providers, especially SMBs, can harness advanced analytics, scale securely, and reduce costs while staying compliant and future-ready.

1. Advanced analytics and AI integration

Cloud platforms, particularly those using AWS services, provide healthcare businesses with powerful analytics tools and AI algorithms that can process large volumes of data in real time. Some benefits include:

- Improved diagnostics: AWS AI services such as Amazon Bedrock, which provides access to foundation models, can help healthcare providers analyze complex datasets to support more accurate diagnoses.

- Optimized treatment: Amazon SageMaker can be used to build and deploy predictive models that support personalized treatment plans.

- Data-driven decisions: Real-time analytics help providers optimize resource allocation and decision-making.

2. Enhanced data accessibility and interoperability

AWS cloud services make healthcare data accessible from anywhere, reducing reliance on physical records and improving access to patient information.

- Seamless data sharing: AWS HealthLake stores and indexes health data in the HL7 FHIR standard format, enabling efficient data exchange across healthcare systems.

- Improved collaboration: Facilitates real-time access to patient records, improving care coordination.

- Critical data access: Enables immediate access to patient data during emergencies, enhancing timely decision-making.
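To make the interoperability point concrete, here is a minimal sketch of a FHIR R4 `Patient` resource, the kind of standardized record a FHIR-based service such as AWS HealthLake works with. It only builds and prints the JSON; the helper name `build_patient` and all patient details are hypothetical, and it does not call any AWS API.

```python
import json


def build_patient(patient_id: str, family: str, given: list[str], gender: str, birth_date: str) -> dict:
    """Return a minimal FHIR R4 Patient resource (hypothetical helper)."""
    return {
        "resourceType": "Patient",                       # identifies the FHIR resource type
        "id": patient_id,
        "name": [{"family": family, "given": given}],    # FHIR HumanName structure
        "gender": gender,                                # FHIR codes: male | female | other | unknown
        "birthDate": birth_date,                         # FHIR date format: YYYY-MM-DD
    }


patient = build_patient("example-001", "Doe", ["Jane"], "female", "1980-04-12")
print(json.dumps(patient, indent=2))
```

Because every FHIR-conformant system understands the same `Patient` structure, records like this can move between EHRs, labs, and analytics tools with far less custom mapping between each pair of systems.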

3. Cost efficiency with cloud computing in healthcare

Cloud computing allows healthcare providers to significantly reduce the costs of maintaining on-site IT infrastructure.

- Reduced hardware costs: Less need to buy and maintain expensive physical servers and equipment.

- Pay-as-you-go model: Healthcare businesses only pay for the resources they use, avoiding unnecessary expenses.

- Reallocation of savings: Cost savings can be reinvested into improving patient care and expanding services.

4. Data protection with cloud computing

Security is a top concern in healthcare, and AWS cloud services offer robust data protection features to safeguard sensitive patient information.

- Data encryption: Supports encrypting patient data both in transit and at rest.

- Multi-factor authentication: Adds an extra layer of security to prevent unauthorized access.

- Compliance with regulations: AWS offers HIPAA-eligible services and signs Business Associate Agreements, simplifying regulatory adherence, although customers remain responsible for configuring and using those services in a compliant way.
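Encryption in transit can be enforced rather than merely offered. A common pattern is an S3 bucket policy that denies any request not made over HTTPS, using the `aws:SecureTransport` condition key. The sketch below only builds that policy as a Python dictionary; the bucket name and helper name are hypothetical, and applying the policy (for example with boto3's `put_bucket_policy`) is not shown.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> dict:
    """Build an S3 bucket policy that rejects any request not sent over TLS (hypothetical helper)."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" when the request did not use HTTPS
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


policy = deny_insecure_transport_policy("example-patient-records")
print(json.dumps(policy, indent=2))
```

Encryption at rest is configured separately, for example through the bucket's default encryption settings.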

5. Scalability and flexibility

AWS cloud services offer a high degree of flexibility, allowing healthcare providers to scale resources according to their needs.

- Rapid scaling: Easily adjust computing power during peak periods or patient volume changes.

- Elastic storage: Increase patient data storage capacity without needing on-site infrastructure.

- Agility: Healthcare organizations can respond quickly to market or operational changes, ensuring long-term growth and competitiveness.
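Elastic scaling usually follows a simple idea: size the fleet so that a load metric returns to a target value. The sketch below is a simplified proportional rule in the spirit of target tracking scaling, not AWS's exact algorithm; the function name, metric, and limits are illustrative assumptions.

```python
import math


def desired_capacity(current: int, metric: float, target: float, min_size: int, max_size: int) -> int:
    """Proportional scaling rule: resize so the per-instance metric moves back toward the target."""
    if current <= 0 or target <= 0:
        raise ValueError("current capacity and target must be positive")
    desired = math.ceil(current * metric / target)
    # Clamp to the group's configured bounds
    return max(min_size, min(max_size, desired))


# 4 instances at 90% average CPU with a 60% target: scale out
print(desired_capacity(4, 90.0, 60.0, min_size=2, max_size=10))  # 6
# 4 instances at 20% average CPU: scale in, but never below min_size
print(desired_capacity(4, 20.0, 60.0, min_size=2, max_size=10))  # 2
```

The `min_size` and `max_size` bounds play the same role as an Auto Scaling group's minimum and maximum capacity: they keep a noisy metric from shrinking the fleet to nothing or growing it without limit.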

Cloudtech provides customized AWS cloud solutions that help healthcare SMBs scale securely, reduce costs, and enhance patient care.

How is cloud computing used in healthcare?

Cloud computing plays a crucial role across key areas of healthcare, from telemedicine to real-time data sharing and medical imaging, supporting faster, smarter, and more connected care delivery.

- Telemedicine: Cloud-based platforms allow healthcare providers to conduct virtual consultations, enhancing access to care, particularly in rural or underserved areas. Providers can securely share patient data during remote visits, offering a seamless experience for both doctors and patients.

- Electronic health records (EHR): Cloud-based EHR systems enable healthcare professionals to securely store, manage, and access patient records in real time, improving care coordination and reducing errors associated with paper-based systems.

- Clinical collaboration and data sharing: Cloud platforms facilitate collaboration between healthcare providers by enabling real-time sharing of patient data across different locations and facilities, improving communication and ensuring continuity of care.

- Remote monitoring: Cloud platforms support the integration of wearable devices and remote monitoring tools, allowing healthcare providers to track patient health metrics in real time and intervene if necessary.

- Medical imaging: Cloud computing enables healthcare organizations to store and access large medical image files (such as X-rays and MRIs) securely, facilitating faster diagnoses and easy sharing among specialists for consultation.

- Supply chain management: Cloud computing enhances inventory management by providing real-time data on medical supplies, ensuring timely restocking, and reducing the risk of shortages.
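Remote monitoring ultimately comes down to rules applied to a stream of readings. As a hypothetical sketch, the code below flags heart-rate readings outside a fixed range. The thresholds and names are illustrative only, not clinical guidance, and in a real deployment readings would arrive through a streaming service (for example Amazon Kinesis) rather than a Python list.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    patient_id: str
    heart_rate_bpm: int


def flag_abnormal(readings, low=50, high=120):
    """Return readings outside [low, high]; thresholds are illustrative, not clinical guidance."""
    return [r for r in readings if not (low <= r.heart_rate_bpm <= high)]


stream = [
    Reading("p-01", 72),
    Reading("p-02", 134),
    Reading("p-03", 45),
]

for alert in flag_abnormal(stream):
    print(f"ALERT {alert.patient_id}: {alert.heart_rate_bpm} bpm")
```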

If you’re an SMB in healthcare, whether offering telemedicine, managing EHRs, or handling medical imaging, cloud computing can transform how you operate and care for patients. Partner with Cloudtech to implement secure, scalable AWS solutions tailored to your needs and start unlocking the full potential of cloud-powered healthcare.

Conclusion

Cloud computing has become an important tool in healthcare, offering a range of benefits such as operational efficiency, improved patient care, and cost savings. By enhancing data accessibility, enabling real-time collaboration, and supporting advanced analytics, cloud technology is transforming healthcare delivery across the globe. Healthcare providers who adopt cloud solutions are better positioned to streamline operations, ensure compliance, and improve patient outcomes.

Cloudtech’s services, including Data Modernization, Infrastructure and Resilience, are designed to support your healthcare organization's evolving needs. Optimize your operations and ensure your data and infrastructure are secure and scalable for the future. Reach out to Cloudtech!

FAQs

1. How does cloud computing improve patient care?

A: Cloud computing enhances patient care by providing healthcare professionals with immediate access to accurate and up-to-date patient data, regardless of location. It also supports telemedicine, remote monitoring, and personalized treatment plans, improving patient outcomes and reducing care delays.

2. Can cloud computing help healthcare organizations stay compliant with regulations?

A: Yes. Cloud computing can help healthcare organizations comply with regulations like HIPAA by providing secure data storage and real-time monitoring of who accesses patient information. Cloud providers continuously update their services to meet industry regulations and security standards, but each organization remains responsible for how it configures and uses those services.

3. How can cloud computing help my healthcare organization save on operational costs?

A: Cloud computing can significantly reduce the need for expensive on-site infrastructure, such as physical servers and storage systems. By shifting to the cloud, your healthcare organization can cut hardware costs, maintenance expenses, and IT staff overhead while benefiting from scalable solutions that grow with your business needs.

4. Is cloud computing suitable for small and medium-sized healthcare businesses?

A: Absolutely! Cloud solutions are flexible and scalable, making them ideal for businesses of all sizes. As a small or medium-sized healthcare provider, cloud computing allows you to access enterprise-level technology without the significant upfront costs or complex management. This allows you to compete with larger organizations while optimizing your operations.

5. How can cloud computing support my business's disaster recovery and backup plans?

A: Cloud solutions offer comprehensive backup and disaster recovery options, ensuring your data is safe and can be easily recovered during an emergency. By utilizing cloud infrastructure, you can maintain business continuity during natural disasters or technical issues, ensuring minimal downtime and data loss.

AWS business continuity and disaster recovery plan

In today’s fast-paced business environment, ensuring business continuity during disruptions is critical for long-term success.

For small and medium-sized businesses (SMBs), an AWS business continuity and disaster recovery plan helps keep operations resilient, minimize downtime, and protect sensitive data when unexpected events occur.

In this guide, we'll walk you through building a disaster recovery plan with AWS services. You'll learn how to assess your applications' architecture, identify potential risks, and use services like Amazon EC2, Amazon RDS, and Amazon S3 to prepare your business for the unexpected.

What is AWS's business continuity and disaster recovery plan?

AWS Business Continuity and Disaster Recovery (BCDR) is a strategy designed to ensure businesses continue operating during and after disruptions.

These disruptions are usually caused by technical failures, human errors, or natural disasters that can damage physical infrastructure and disrupt communication networks. AWS enables businesses to develop a comprehensive disaster recovery strategy that ensures quick recovery, minimizes data loss, and maintains operational continuity during unforeseen disruptions.

What AWS disaster recovery strategies can businesses use to ensure continuity?

AWS describes four main disaster recovery strategies: Backup and Restore, Pilot Light, Warm Standby, and Multi-Site Active/Active. Each offers distinct benefits depending on the business's requirements.

1. Backup and restore

When to Use: Ideal for non-critical workloads or businesses that can tolerate some downtime, this is the most budget-friendly option.

How It Works:

- Data is backed up regularly, for example with Amazon EBS snapshots, Amazon DynamoDB backups, or Amazon RDS snapshots.

- In an outage, data is restored to newly provisioned AWS resources.

2. Pilot light

When to Use: Suitable for businesses that need a minimal operational environment with faster recovery than Backup and Restore, but still want to minimize costs.

How It Works:

- Keep only the essential components of the infrastructure running in AWS.

- In the event of an outage, quickly scale up these resources to restore full capacity.

3. Warm standby

When to Use: This is ideal for businesses that need faster recovery than Pilot Light but can keep a scaled-down version of their environment operational at all times.

How It Works:

- Maintain a scaled-down version of the infrastructure in AWS.

- If disaster strikes, scale up resources quickly to meet operational needs.

4. Multi-site active/active

When to Use: Ideal for mission-critical operations that require continuous availability and near-zero downtime when failures occur.

How It Works:

- Run workloads in multiple AWS regions simultaneously.

- Each region serves live traffic, and the remaining regions can automatically take over if one fails.
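The four strategies trade cost against recovery speed. The sketch below picks the cheapest strategy whose typical recovery meets both a recovery time objective (RTO) and a recovery point objective (RPO). The minute thresholds are illustrative assumptions loosely based on commonly cited ranges (hours for Backup and Restore, tens of minutes for Pilot Light, minutes for Warm Standby, near zero for Multi-Site Active/Active); they are not AWS-published limits, and real values depend on the workload.

```python
def choose_dr_strategy(rto_minutes: float, rpo_minutes: float) -> str:
    """Return the cheapest strategy whose typical recovery meets both targets.

    The thresholds are illustrative assumptions, not AWS-published limits.
    """
    # (strategy, typical achievable RTO/RPO in minutes), ordered cheapest first
    tiers = [
        ("Backup and Restore", 240),
        ("Pilot Light", 30),
        ("Warm Standby", 5),
    ]
    for name, achievable_minutes in tiers:
        if rto_minutes >= achievable_minutes and rpo_minutes >= achievable_minutes:
            return name
    # Only an active/active setup approaches zero downtime and zero data loss
    return "Multi-Site Active/Active"


print(choose_dr_strategy(rto_minutes=480, rpo_minutes=1440))  # Backup and Restore
print(choose_dr_strategy(rto_minutes=60, rpo_minutes=15))     # Warm Standby
print(choose_dr_strategy(rto_minutes=1, rpo_minutes=0))       # Multi-Site Active/Active
```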

Quick Comparison of AWS disaster recovery strategies: Pros and cons

To help you better understand the advantages and limitations of each strategy, here is a quick comparison:

How does business continuity differ from disaster recovery?

A Business Continuity plan takes a broader approach. It ensures that all business functions, including operations, personnel, and communications, continue running with minimal disruption during and after a crisis.

A Disaster Recovery (DR) plan, in contrast, focuses specifically on restoring IT infrastructure, systems, and data, which is just one component of overall business continuity.

Why is a DR plan essential for modern businesses?

A DR plan is crucial for minimizing downtime and protecting the data in critical IT systems. It enables rapid data recovery, so businesses can maintain operations and minimize disruption during unexpected events.

How AWS supports seamless operations during disruptions

AWS provides powerful tools to help businesses continue operating even during disruptions. Multi-AZ and multi-region deployments, combined with automated backups, can help minimize the impact on operations.

- Multi-AZ Strategy: With AWS's Multi-Availability Zone strategy, businesses can continue to operate even if one availability zone faces an outage.

- Multi-Region Support: AWS enables data to be backed up across multiple regions, providing disaster recovery across different geographic areas.

- Scalable Infrastructure: AWS's flexible cloud platform lets businesses adjust capacity as conditions change and maintain performance during crises.

- Automated Backups: Services such as AWS Backup and Amazon RDS automated backups run backups on a schedule, so data can be restored quickly and easily.

With AWS's resilient infrastructure, businesses can stay operational through most disruptions, with minimal impact and fast recovery.
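Automated, multi-region backups can be described declaratively. The sketch below builds a backup plan in the shape that AWS Backup's `CreateBackupPlan` API expects (for example, as the `BackupPlan` argument to boto3's `create_backup_plan`): a daily rule that keeps recovery points for 35 days and copies each one to a vault in another region. The plan name, vault names, region, and account ID are hypothetical, and assigning resources to the plan (a separate backup selection) is not shown.

```python
import json


def build_backup_plan(vault_name: str, dr_vault_arn: str) -> dict:
    """Daily backups kept 35 days, each copied to a vault in another region (hypothetical names)."""
    return {
        "BackupPlanName": "daily-with-cross-region-copy",
        "Rules": [
            {
                "RuleName": "daily-0500-utc",
                "TargetBackupVaultName": vault_name,
                # AWS cron syntax: minutes hours day-of-month month day-of-week year
                "ScheduleExpression": "cron(0 5 ? * * *)",
                "Lifecycle": {"DeleteAfterDays": 35},
                "CopyActions": [
                    {
                        # The copy lands in a vault in a different region
                        "DestinationBackupVaultArn": dr_vault_arn,
                        "Lifecycle": {"DeleteAfterDays": 35},
                    }
                ],
            }
        ],
    }


plan = build_backup_plan(
    "primary-vault",
    "arn:aws:backup:us-west-2:111122223333:backup-vault:dr-vault",
)
print(json.dumps(plan, indent=2))
```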

Establishing clear recovery goals: RTO and RPO for effective business continuity

When crafting an AWS business continuity and disaster recovery plan, it is important to define clear Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs). These metrics determine how quickly systems must be restored and how much data loss is acceptable during disruptions. AWS's range of tools and services makes these objectives easier to meet.

What is the recovery time objective?

Recovery time objective (RTO) is the maximum allowable downtime for a critical service following an incident. It defines how long a business can tolerate being without a key system before operations are significantly impacted.

- Why it matters: Businesses cannot afford prolonged downtime. Defining an RTO helps prioritize the restoration of essential services.

- How AWS helps: Amazon EC2 automatic recovery (which moves an impaired instance to healthy hardware) and Amazon S3 (for durable backup storage) support fast restoration, helping businesses meet RTO objectives and minimize downtime.

What is a recovery point objective?

Recovery point objective (RPO) is the maximum acceptable amount of data loss a business can withstand, measured in time. It determines how recent the last usable backup must be: with a one-hour RPO, for example, no more than one hour of data may be lost after a failure.

- Why it matters: For many businesses, even a few hours of data loss can have significant financial and reputational consequences.

- How AWS helps: Amazon RDS automated backups and Amazon DynamoDB point-in-time recovery let businesses back up data continuously or at regular intervals, making it easier to meet RPO goals and safeguard against data loss.
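A quick worked check shows how backup frequency drives RPO and restore time drives RTO. Under the simplifying assumptions that each backup captures data as of its start time and that downtime equals detection time plus restore time, the worst-case data loss is the backup interval plus the backup's duration. The function names and numbers below are hypothetical.

```python
def worst_case_data_loss_minutes(backup_interval_min: float, backup_duration_min: float = 0.0) -> float:
    # A failure just before the next backup completes loses everything since the last completed one.
    return backup_interval_min + backup_duration_min


def meets_objectives(backup_interval_min, backup_duration_min, detect_min, restore_min, rto_min, rpo_min):
    """Return (meets_rto, meets_rpo) for a simple periodic-backup plan."""
    data_loss = worst_case_data_loss_minutes(backup_interval_min, backup_duration_min)
    downtime = detect_min + restore_min
    return downtime <= rto_min, data_loss <= rpo_min


# Nightly backups taking 30 min, 15 min to detect, 2 h to restore; RTO 4 h, RPO 1 h
print(meets_objectives(1440, 30, 15, 120, rto_min=240, rpo_min=60))  # (True, False)
# Same restore path, but backups every 30 min taking 5 min
print(meets_objectives(30, 5, 15, 120, rto_min=240, rpo_min=60))     # (True, True)
```

Nightly backups comfortably meet a four-hour RTO here but cannot meet a one-hour RPO; only a shorter backup interval (or continuous replication) closes that gap.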

Aligning RTO and RPO with business objectives

Defining RTO and RPO in alignment with business needs ensures that an AWS disaster recovery plan supports operational objectives. Here's how businesses can align them effectively:

- Identify critical workloads: Businesses must understand their most critical operations and set an RTO to prioritize them for recovery.

- Balance costs and recovery needs: AWS offers several disaster recovery strategies, such as Backup and Restore, Pilot Light, Warm Standby, and Multi-Site Active/Active. Businesses should choose the right strategy based on their RTO and RPO goals and budget.

- Avoid over-investment: Setting realistic RTO and RPO goals prevents over-investment in resources while maintaining a resilient business continuity strategy.

- Minimize business impact: A well-defined RTO reduces operational disruption, while a suitable RPO ensures data protection, helping businesses maintain customer trust and continuity.

How can disaster recovery be integrated into business continuity planning?

Disaster recovery can be integrated into business continuity planning using AWS tools to automate data backups, ensure quick recovery, and maintain critical operations. This enables seamless transitions during disruptions, ensuring minimal downtime and business resilience.

- Define RTO: To ensure timely recovery, set the maximum allowable downtime for each critical function.

- Define RPO: Determine the maximum acceptable data loss to guide backup strategies and minimize disruption.

- Automate backup and recovery procedures: Use tools like AWS Backup, AWS Lambda, and AWS CloudFormation to automate backups and recovery processes. This ensures consistency, reduces human error, and speeds up recovery.

- Establish redundancy across locations: Create redundant systems using AWS multi-region or multi-availability zone architectures. This ensures business-critical applications remain operational even if one location fails.

- Regular DR drills and testing: Test the DR plan through simulated disaster recovery drills. This helps identify weaknesses and ensures the team is prepared to respond quickly.

- Data replication and failover: Implement data replication (e.g., AWS Elastic Disaster Recovery, Amazon S3 Cross-Region Replication) for critical systems. This enables quick failover during a disaster, minimizing downtime and data loss.

- Clear communication plans: During a disaster, ensure clear communication with both internal teams and external stakeholders. Predefined channels and protocols help ensure everyone is aligned and informed throughout recovery.

- Prioritize systems based on criticality: Identify which systems are most critical to the business and prioritize them for recovery. Not all systems need to be restored simultaneously, and prioritization ensures that resources are allocated efficiently during recovery.
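Prioritization matters because restores often happen one after another. The sketch below, under the simplifying assumption that systems are restored sequentially by a single team, orders systems by tightest RTO and reports whether each would be back within its objective. System names and timings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    rto_min: int      # recovery time objective, in minutes
    restore_min: int  # estimated time to restore this system, in minutes


def plan_recovery(systems):
    """Restore systems one at a time, tightest RTO first; report when each is back and whether it meets its RTO."""
    elapsed = 0
    report = []
    for s in sorted(systems, key=lambda s: s.rto_min):
        elapsed += s.restore_min
        report.append((s.name, elapsed, elapsed <= s.rto_min))
    return report


systems = [
    System("reporting", rto_min=1440, restore_min=90),
    System("website", rto_min=240, restore_min=60),
    System("orders-db", rto_min=60, restore_min=45),
]

for name, done_at, ok in plan_recovery(systems):
    print(f"{name}: back after {done_at} min, {'meets' if ok else 'misses'} RTO")
```

If a system reports a miss, the options are to restore it in parallel, shorten its restore time (for example, with a warmer standby), or revisit its RTO.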

While a disaster recovery plan is crucial, businesses often face challenges in implementing and maintaining it.

How can you overcome challenges in AWS disaster recovery?

Implementing an AWS disaster recovery (DR) strategy often presents challenges, including budget constraints, setup complexity, and resource management. However, with the right tools and strategies, SMBs can efficiently overcome these obstacles.

1. Budget constraints

AWS's pay-as-you-go model helps businesses control costs by only charging for the resources used. Services like Amazon S3 and EC2 allow businesses to scale their disaster recovery solutions without hefty upfront costs. Additionally, AWS Storage Gateway offers a cost-effective way to integrate on-premises data with cloud storage for disaster recovery.

2. Setup complexity

AWS simplifies setup with tools like AWS CloudFormation, which automates infrastructure deployment, and AWS Elastic Disaster Recovery (AWS DRS), which handles data replication and failover tasks. These services reduce manual effort and simplify the process.
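Infrastructure as code is what makes a recovery environment reproducible. The sketch below builds a minimal AWS CloudFormation template, as a Python dictionary, for a versioned, encrypted S3 bucket that could hold backups. The logical resource name and description are illustrative; once written to a JSON file, the same template could be deployed to a second region (for example with `aws cloudformation deploy`) to recreate the bucket there.

```python
import json

# Minimal CloudFormation template: one versioned, encrypted S3 bucket for backups (illustrative).
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Example DR backup bucket (illustrative)",
    "Resources": {
        "BackupBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                # Versioning keeps earlier copies of overwritten or deleted objects
                "VersioningConfiguration": {"Status": "Enabled"},
                # Default server-side encryption with S3-managed keys
                "BucketEncryption": {
                    "ServerSideEncryptionConfiguration": [
                        {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                    ]
                },
            },
        }
    },
    # Ref on an S3 bucket resolves to the bucket name
    "Outputs": {"BackupBucketName": {"Value": {"Ref": "BackupBucket"}}},
}

print(json.dumps(template, indent=2))
```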

3. Resource management

AWS Auto Scaling adjusts resources based on demand, preventing over-provisioning and reducing costs. AWS Trusted Advisor offers insights on cost optimization and resource efficiency, helping businesses manage their recovery environment effectively.

Wrapping up

Implementing an AWS Business Continuity and Disaster Recovery plan is essential for SMBs aiming to minimize downtime and safeguard critical data. Without such a strategy, businesses risk prolonged outages and data loss when unforeseen events occur. AWS provides a reliable framework that addresses these challenges, enabling businesses to maintain resilience and continuity in their operations.

Cloudtech, an AWS Advanced Tier Partner, specializes in delivering tailored AWS disaster recovery solutions for SMBs. Their services encompass automated failovers, real-time backups, and multi-region resilience, ensuring minimal downtime and data integrity.

With expertise in AWS architecture, Cloudtech helps businesses design and deploy efficient disaster recovery strategies, enabling them to focus on growth while ensuring operational resilience.

Ready to fortify your business against disruptions? Talk to Cloudtech today to implement an AWS disaster recovery plan tailored to your needs.

FAQs

1. Why is AWS considered a good option for disaster recovery?

AWS offers a highly scalable, reliable, and cost-effective cloud platform that can automatically replicate critical business data and applications across multiple regions. With services like Amazon S3, EC2, and RDS, AWS ensures that businesses can restore their operations quickly, minimizing downtime during unexpected disruptions.

2. What role does data security play in disaster recovery with AWS?

Data security is crucial in disaster recovery as businesses must ensure their data is protected during normal operations and recovery situations. AWS provides encryption at rest and in transit, ensuring that data remains secure while being backed up and restored across its cloud infrastructure.

3. How can SMBs ensure minimal downtime during a disaster recovery event?

To minimize downtime, SMBs should implement strategies like AWS Multi-AZ or Multi-Region deployment, where their applications are replicated across different geographical locations. This allows the business to quickly fail over to a secondary site if the primary site experiences an outage, significantly reducing downtime.

4. What are the best practices for maintaining an effective AWS disaster recovery plan?

Best practices include regular testing of disaster recovery plans, setting clear Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO), automating backups, and continuously monitoring recovery processes. Additionally, businesses should ensure that their recovery architecture is scalable and flexible to meet changing demands.

5. How does AWS disaster recovery help businesses recover from natural disasters?

AWS disaster recovery solutions, such as cross-region replication, allow businesses to recover quickly from natural disasters by providing a backup site in a geographically separate location. This ensures that even if one region is impacted, businesses can fail over to another region and continue their operations with minimal disruption.

5 Benefits of Data Lakes for Small Businesses

Data is becoming one of the most valuable assets for small and medium-sized businesses, but only if you know how to use it.

According to Forbes, 95% of businesses struggle with unstructured data, and Forrester reports that 73% of enterprise data goes unused for analytics. It's no wonder that 94% of leaders say they need to extract more value from the data they already have.

Data lakes offer a solution to this. They centralize all your business data, regardless of format, into one scalable, accessible storage layer. Whether it's CRM records, sales reports, customer feedback, or even social media mentions, data lakes turn scattered information into a powerful decision-making tool.

What is a Data Lake?

A data lake is a central repository that stores vast amounts of raw data, both structured (CRM records, sales figures, or Excel spreadsheets) and unstructured (emails, PDFs, images, or social media posts). Unlike traditional databases, it doesn't require data to be cleaned or organized before storage, saving time and costs.

How Does a Data Lake Work?

- Data Ingestion: Your raw data (structured and unstructured) is pulled in from multiple sources. These could be your website, POS system, social media, or third-party APIs.

- Storage in Raw Format: This data is stored as-is in the data lake, without the need for immediate cleaning or formatting. Think of it as pouring everything into one central, scalable pool, usually on cloud object storage such as Amazon S3.

- Data Cataloging & Indexing: Metadata (data about your data) is created to help organize and classify everything. This step ensures that users can easily search and retrieve relevant datasets when needed.

- Data Processing & Transformation: When you’re ready to analyze, tools like AWS Glue or Amazon EMR process and transform the data into usable formats, cleaning, filtering, or reshaping it based on your specific needs.

- Analytics & Insights: Once processed, the data is fed into analytics tools (like Amazon QuickSight or Power BI) for dashboards, reports, or machine learning models, powering smarter, data-driven decisions.

- Access Control & Governance: Throughout, access is managed with permission settings and compliance protocols, so only the right people can access the right data, keeping everything secure and audit-ready.
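Much of the cataloging and processing described above depends on how raw files are laid out in storage. A common convention is Hive-style `key=value` prefixes, which AWS Glue crawlers and Amazon Athena can recognize as partition columns so that queries read only the relevant folders. The layout and the helper below are a hypothetical sketch, not a required structure.

```python
from datetime import datetime, timezone


def raw_object_key(source: str, event_time: datetime, filename: str) -> str:
    """Build a Hive-style partitioned key for the raw zone of a data lake (hypothetical layout)."""
    return (
        f"raw/source={source}/"
        f"year={event_time:%Y}/month={event_time:%m}/day={event_time:%d}/"
        f"{filename}"
    )


key = raw_object_key("pos", datetime(2024, 3, 7, 14, 30, tzinfo=timezone.utc), "sales-0001.json")
print(key)  # raw/source=pos/year=2024/month=03/day=07/sales-0001.json
```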

As small businesses look to manage these growing volumes of data, two solutions often come up: data lakes and data warehouses. While both store data, they serve different purposes. Understanding the differences up front helps you choose the option that fits your business needs and future scalability.

How to Differentiate a Data Lake from a Data Warehouse?

A data warehouse stores data that is highly organized and structured for quick analysis. It requires predefined schemas, meaning you must know in advance how the data will be used before storing it.

On the other hand, a data lake stores data in its raw format, whether structured or unstructured, offering much more flexibility. This raw data can later be transformed and used for various purposes, such as machine learning or business intelligence.

The ability to store data without needing to define its structure upfront makes a data lake a more adaptable solution for small businesses that handle diverse types of data.
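The difference is often summarized as schema-on-write (warehouse) versus schema-on-read (lake). In the hypothetical sketch below, records with inconsistent fields are stored untouched, and a schema is applied only when a particular analysis reads them; a warehouse would instead have to reject or reshape these records at load time.

```python
import json

# Raw records land in the lake as-is; fields and types vary by source (hypothetical data).
raw_lines = [
    '{"order_id": "A1", "amount": "19.99", "channel": "web"}',
    '{"order_id": "A2", "amount": 5, "note": "phone order"}',
    '{"order_id": "A3"}',
]


def read_with_schema(lines):
    """Schema-on-read: apply the structure this analysis needs only at read time."""
    for line in lines:
        record = json.loads(line)
        if "amount" not in record:
            continue  # this analysis needs an amount; other analyses might still use the record
        yield {"order_id": record["order_id"], "amount": float(record["amount"])}


print(list(read_with_schema(raw_lines)))
```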

5 Key Benefits of Data Lakes for SMBs

When it comes to managing data, small businesses need solutions that are both affordable and flexible. A data lake provides just that, offering significant advantages to SMBs. Here are the key benefits that make data lakes a valuable investment for your business:

1. Cost Efficiency

One of the major advantages of data lakes is their cost efficiency. Data lakes allow you to store raw, unprocessed data, eliminating the need for expensive data transformation up front. This helps reduce both upfront processing and ongoing maintenance costs.

Cloud-native platforms like Amazon S3, often used in data lake setups, follow pay-as-you-go pricing. S3 Standard storage costs about $0.023 per GB per month for the first 50 TB in the US East (N. Virginia) region, with lower rates at higher volumes and for colder storage classes. Azure Data Lake Storage offers similar models at around $0.03 per GB per month.
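To see what pay-as-you-go storage means in practice, here is a rough estimate at the flat $0.023 per GB-month rate. It is a simplification: actual S3 pricing is tiered and varies by region and storage class, and request, data transfer, and analytics charges are not included. The function name is hypothetical.

```python
def monthly_storage_cost(gb_stored: float, price_per_gb_month: float = 0.023) -> float:
    """Flat-rate estimate in USD; real S3 pricing is tiered and varies by region and storage class."""
    return round(gb_stored * price_per_gb_month, 2)


for gb in (100, 500, 2000):
    print(f"{gb} GB -> ${monthly_storage_cost(gb):.2f}/month")
```

For example, 500 GB of raw data comes to roughly $11.50 per month at this rate.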

With no upfront infrastructure costs, SMBs can build scalable, high-performance data lakes while keeping budgets predictable. Companies like Cloudtech help SMBs make the most of this model, architecting efficient data lakes on AWS that scale with your business and avoid unnecessary spend.

2. Scalability

As your business grows, so does your data. A data lake for small businesses can scale easily to accommodate large volumes of data without a complete infrastructure overhaul. You can add more storage as needed without worrying about complex data migrations or reconfigurations, making it ideal for growing businesses with expanding data needs.

3. Flexibility

Data lakes support a wide variety of data types, including structured, semi-structured, and unstructured data. This means you can store everything from transactional data to text files, emails, and social media content all in one place. With this flexibility, you can apply diverse analytics and reporting techniques, allowing you to uncover insights from every corner of your business.

4. Improved Data Accessibility

With a data lake, all your data is stored in one central location, making it easy to access and manage. Whether you're analyzing sales performance, customer feedback, or operational data, you can retrieve all the information you need without hunting through different systems or platforms. This ease of access can significantly improve decision-making and streamline your business processes.

5. Future-Proofing

A data lake for small businesses doesn't just solve today's data management challenges; it prepares you for the future. With the ability to integrate advanced technologies like AI, machine learning, and predictive analytics, a data lake helps your business get ready for tomorrow's innovations. As you evolve, your data lake can adapt to your changing needs, keeping you ahead of the curve.

With these benefits, it’s no surprise that more SMBs are investing in data lakes to stay agile, competitive, and data-driven. While a data lake offers tremendous potential for small businesses, it also presents certain challenges that need careful consideration.

What are the Challenges of Data Lakes?

The complexity of managing large volumes of data, ensuring data quality, maintaining security, and meeting compliance standards can overwhelm SMBs without the right approach. These factors are critical to unlocking the full value of a data lake, and neglecting them can result in ineffective data management, increased risks, and missed opportunities.

- Data Governance: Organizing and cataloging your data is crucial for maintaining its quality and accessibility. Without proper management, data can become difficult to analyze and prone to errors.

- Security and Compliance: With sensitive data often stored in data lakes, strong security measures like encryption and access control are essential. Regular audits are also needed to ensure compliance with industry regulations.

- Assessing Data Needs: Before adopting a data lake, evaluate the volume and variety of data your business generates. A well-aligned data lake will support long-term growth and scalability.
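Governance often starts with simple, automated quality checks before raw data is promoted for analysis. The sketch below validates records against a hypothetical set of required fields and types. Managed options such as AWS Glue Data Quality exist; this plain-Python version only illustrates the idea.

```python
# Hypothetical schema: required field -> expected Python type
REQUIRED_FIELDS = {"customer_id": str, "email": str, "signup_date": str}


def validate(record: dict) -> list[str]:
    """Return a list of data-quality problems found in one record (empty list means it passed)."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems


print(validate({"customer_id": "C9", "email": None}))
```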

To address these challenges, selecting the right tools and technologies is essential for SMBs to effectively manage and maximize the potential of their data lake.

Tools and Technologies for Data Lakes in SMBs

To make the most of a data lake, small businesses need the right tools and technologies to simplify data management and drive valuable insights. Here are some key tools that can support your data lake strategy:

- Cloud-Based Solutions: Platforms like AWS offer affordable, scalable cloud solutions for small businesses. These platforms allow you to store and manage large amounts of data flexibly, without the need for physical infrastructure. They also provide security and compliance features to protect sensitive data.

- Data Orchestration Tools: Services like AWS Glue and Amazon EMR help streamline data management by integrating, cleaning, and transforming data from multiple sources. These managed services save time on manual processes and help ensure your data is ready for analysis.

But tools alone aren’t enough. The data lake also needs to work in harmony with the systems you already use.

Integration with Existing Systems

One of the biggest advantages of a data lake for small businesses is its ability to integrate seamlessly with existing systems. Data lakes can easily connect with web APIs, relational databases, and other tools your business already uses. This integration enables a smooth data flow across systems, ensuring consistency and real-time data access.

With partners like Cloudtech, SMBs can ensure their data lake integrates smoothly with existing business systems, avoiding silos and unlocking unified, real-time data access. Whether you’re using CRM software, marketing tools, or other business applications, a data lake can centralize and streamline your data management.

Conclusion

Handling data efficiently is often a struggle for small businesses, especially when it's scattered across different systems. Without a unified system, accessing and making sense of that data becomes time-consuming and challenging. A data lake for small businesses solves this problem by centralizing your data, making it easier to analyze and use for better decision-making. This approach can help you identify trends, improve operations, and ultimately save time and money.

Cloudtech specializes in helping small businesses manage data more effectively with tailored data lake solutions. Their team works closely with you to create a system that streamlines data storage, access, and analysis, driving better insights and business growth.

Ready to simplify your data management? Reach out to Cloudtech today and discover how their data lake solutions can support your business goals.

FAQs

- How does a data lake improve collaboration within my business?

A data lake centralizes data from various departments, making it easier for teams across your business to access and collaborate on insights. With all your data in one place, your employees can make informed decisions, leading to better teamwork and streamlined processes.

- What are the security risks of using a data lake, and how can I mitigate them?

Data lakes store large volumes of sensitive data, which can pose security risks. To mitigate these, ensure robust encryption, access controls, and regular audits are in place. Working with cloud providers that offer built-in security features can also help protect your data.

- Can a data lake help with data privacy compliance?

Yes, a data lake can be configured to meet various data privacy regulations, such as GDPR and CCPA. With proper governance and security protocols, SMBs can ensure that sensitive data is handled and stored in compliance with relevant laws.

- How long does it take to implement a data lake for a small business?

The time required to implement a data lake depends on the complexity of your data and existing systems. With cloud-based solutions and proper planning, small businesses can typically implement a data lake in a few months, making it a scalable, long-term solution.

Supercharge Your Data Architecture with the Latest AWS Step Functions Integrations

In the rapidly evolving cloud computing landscape, AWS Step Functions has emerged as a cornerstone for developers who orchestrate complex, distributed applications in serverless implementations. The recent expansion of AWS SDK integrations marks a significant milestone, adding support for 33 more AWS services, including Amazon Q, AWS B2B Data Interchange, Amazon Bedrock, Amazon Neptune, and Amazon CloudFront KeyValueStore. This enhancement broadens the options for application development and opens new avenues for serverless data processing.

Serverless computing has revolutionized the way we build and scale applications, offering a way to execute code in response to events without the need to manage the underlying infrastructure. With the latest updates to AWS Step Functions, developers now have at their disposal a more extensive toolkit for creating serverless workflows that are not only scalable but also cost-efficient and less prone to errors.

In this blog, we will delve into the benefits and practical applications of these new integrations, with a special focus on serverless data processing. Whether you're managing massive datasets, streamlining business processes, or building real-time analytics solutions, the enhanced capabilities of AWS Step Functions can help you achieve more with less code. With these integrations, your workflows can directly invoke more than 11,000 API actions from more than 220 AWS services, simplifying the architecture and accelerating development cycles.
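To make "directly invoke API actions" concrete, here is a minimal sketch that builds an Amazon States Language (ASL) definition in Python. AWS SDK integrations use a Task `Resource` of the form `arn:aws:states:::aws-sdk:<service>:<apiAction>`, with the action name in camelCase. The S3 `ListObjectsV2` call, the bucket name, and the retry settings are illustrative assumptions.

```python
import json
from typing import Optional


def sdk_resource(service: str, action: str) -> str:
    """Resource ARN for an AWS SDK integration; the API action is written in camelCase."""
    return f"arn:aws:states:::aws-sdk:{service}:{action[0].lower()}{action[1:]}"


def sdk_task(service: str, action: str, parameters: dict, next_state: Optional[str] = None) -> dict:
    state = {
        "Type": "Task",
        "Resource": sdk_resource(service, action),
        "Parameters": parameters,
        # Declarative retries with exponential backoff instead of custom retry code.
        "Retry": [{
            "ErrorEquals": ["States.TaskFailed"],
            "IntervalSeconds": 2,
            "MaxAttempts": 3,
            "BackoffRate": 2.0,
        }],
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


definition = {
    "Comment": "List objects in a bucket through the AWS SDK integration",
    "StartAt": "ListObjects",
    "States": {
        "ListObjects": sdk_task("s3", "ListObjectsV2", {"Bucket": "example-bucket"}),
    },
}

print(json.dumps(definition, indent=2))
```

The printed JSON can be used as a state machine definition; the `Retry` block is what replaces hand-written retry loops.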

Practical Applications in Data Processing

This AWS SDK integration with 33 new services not only broadens the scope of potential applications within the AWS ecosystem but also streamlines the execution of a wide range of data processing tasks. These integrations empower businesses with automated AI-driven data processing, streamlined EDI document handling, and enhanced content delivery performance.

Amazon Q Integration: Amazon Q is a generative AI-powered assistant designed to enhance employee productivity. Integrating Amazon Q with AWS Step Functions adds AI-driven data processing to workflow automation, enabling efficient knowledge discovery, summarization, and content generation across business operations. It supports quick, intuitive data analysis and visualization, which is particularly useful for business intelligence. In customer service, it can provide real-time, data-driven answers that improve efficiency and accuracy, and it can offer insightful responses to complex queries that support data-informed decision-making.

AWS B2B Data Interchange: Integrating AWS B2B Data Interchange with AWS Step Functions streamlines and automates electronic data interchange (EDI) document processing in business workflows. This integration allows for efficient handling of transactions including order fulfillment and claims processing. The low-code approach simplifies EDI onboarding, enabling businesses to utilize processed data in applications and analytics quickly. This results in improved management of trading partner relationships and real-time integration with data lakes, enhancing data accessibility for analysis. The detailed logging feature aids in error detection and provides valuable transaction insights, essential for managing business disruptions and risks.

Amazon CloudFront KeyValueStore: CloudFront KeyValueStore is a global, low-latency key-value datastore that CloudFront Functions can read at edge locations. Integrating it with Step Functions lets workflows update the stored keys and values, such as redirect rules, feature flags, or A/B testing settings, so that the latest configuration is available close to users around the world without redeploying functions.

Amazon Neptune Data: This integration allows the processing of graph data in a serverless environment, which is ideal for applications built on complex relationships and data patterns, such as social networks, recommendation engines, and knowledge graphs. For instance, Step Functions can orchestrate a series of tasks that ingest data into Neptune, execute graph queries, analyze the results, and then trigger other services based on those results, such as updating a dashboard or triggering alerts.

Amazon Timestream Query & Write: The integration is useful in serverless architectures for analyzing high-volume time-series data in real-time, such as sensor data, application logs, and financial transactions. Step Functions can manage the flow of data from ingestion (using Timestream Write) to analysis (using Timestream Query), including data transformation, anomaly detection, and triggering actions based on analytical insights.
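Here is a minimal sketch of the ingestion-to-analysis flow described above, again as an ASL definition built in Python. It assumes the SDK integration service names `timestreamwrite` and `timestreamquery` and the parameters of the Timestream `WriteRecords` and `Query` APIs; the database, table, and SQL are hypothetical, so verify the names against the Step Functions supported-services list before use.

```python
import json

DATABASE, TABLE = "iot", "sensor_readings"  # hypothetical database and table

definition = {
    "Comment": "Write sensor readings to Timestream, then aggregate recent data",
    "StartAt": "WriteReadings",
    "States": {
        "WriteReadings": {
            "Type": "Task",
            "Resource": "arn:aws:states:::aws-sdk:timestreamwrite:writeRecords",
            "Parameters": {
                "DatabaseName": DATABASE,
                "TableName": TABLE,
                "Records.$": "$.records",  # take the records from the execution input
            },
            "ResultPath": None,  # serialized as null: discard the write response, keep the input
            "Next": "AverageByDevice",
        },
        "AverageByDevice": {
            "Type": "Task",
            "Resource": "arn:aws:states:::aws-sdk:timestreamquery:query",
            "Parameters": {
                "QueryString": (
                    "SELECT device_id, avg(measure_value::double) AS avg_temp "
                    f'FROM "{DATABASE}"."{TABLE}" '
                    "WHERE time > ago(15m) GROUP BY device_id"
                ),
            },
            "End": True,
        },
    },
}

# Example execution input with one reading (hypothetical values).
example_input = {
    "records": [{
        "Dimensions": [{"Name": "device_id", "Value": "sensor-1"}],
        "MeasureName": "temperature",
        "MeasureValue": "21.5",
        "MeasureValueType": "DOUBLE",
        "Time": "1709630000000",
        "TimeUnit": "MILLISECONDS",
    }]
}

print(json.dumps(definition, indent=2))
```

Anomaly detection or alerting would slot in as further states after `AverageByDevice`, for example a `Choice` state that inspects the query result.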

Amazon Bedrock & Bedrock Runtime: Amazon Bedrock provides access to foundation models through an API, and Bedrock Runtime is the API used to invoke them. AWS Step Functions can orchestrate complex data processing pipelines that ingest data in real time, perform transformations (including model invocations), and route data to various analytics tools or storage systems. Step Functions manages the flow of data across different Bedrock tasks, handling error retries and parallel processing efficiently.
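The parallel-processing point can be sketched with a `Map` state that fans out over a list of documents and calls a model through the Step Functions optimized Bedrock integration (`arn:aws:states:::bedrock:invokeModel`). The model ID, the request body shape (Amazon Titan Text's `inputText` field), the input path, and the concurrency limit are illustrative assumptions; each model family expects its own body format.

```python
import json

invoke_model = {
    "Type": "Task",
    "Resource": "arn:aws:states:::bedrock:invokeModel",
    "Parameters": {
        "ModelId": "amazon.titan-text-express-v1",  # example model ID (assumption)
        "Body": {
            # Build the prompt from each item's "text" field with an intrinsic function.
            "inputText.$": "States.Format('Summarize this document: {}', $.text)",
        },
    },
    # Back off and retry on task failures such as throttling.
    "Retry": [{
        "ErrorEquals": ["States.TaskFailed"],
        "IntervalSeconds": 3,
        "MaxAttempts": 4,
        "BackoffRate": 2.0,
    }],
    "End": True,
}

definition = {
    "Comment": "Summarize documents in parallel with a foundation model",
    "StartAt": "SummarizeAll",
    "States": {
        "SummarizeAll": {
            "Type": "Map",
            "ItemsPath": "$.documents",  # expects input like {"documents": [{"text": "..."}]}
            "MaxConcurrency": 5,  # cap the number of parallel model calls
            "ItemProcessor": {
                "ProcessorConfig": {"Mode": "INLINE"},
                "StartAt": "InvokeModel",
                "States": {"InvokeModel": invoke_model},
            },
            "End": True,
        }
    },
}

print(json.dumps(definition, indent=2))
```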

AWS Elemental MediaPackage V2: Step Functions can orchestrate video processing workflows that package, encrypt, and deliver video content, including invoking MediaPackage V2 actions to prepare video streams, monitoring encoding jobs, and updating databases or notification systems upon completion.

AWS Data Exports: With Step Functions, you can sequence tasks such as triggering data export actions, monitoring their progress, and executing subsequent data processing or notification steps upon completion. It can automate data export workflows that aggregate data from various sources, transform it, and then export it to a data lake or warehouse.

Benefits of the New Integrations

The recent integrations within AWS Step Functions bring forth a multitude of benefits that collectively enhance the efficiency, scalability, and reliability of data processing and workflow management systems. These advancements simplify the architectural complexity, reduce the necessity for custom code, and ensure cost efficiency, thereby addressing some of the most pressing challenges in modern data processing practices. Here's a summary of the key benefits:

Simplified Architecture: The new service integrations streamline the architecture of data processing systems, reducing the need for complex orchestration and manual intervention.

Reduced Code Requirement: With a broader range of integrations, less custom code is needed, facilitating faster deployment, lower development costs, and reduced error rates.

Cost Efficiency: By optimizing workflows and reducing the need for additional resources or complex infrastructure, these integrations can lead to significant cost savings.

Enhanced Scalability: The integrations allow systems to easily scale, accommodating increasing data loads and complex processing requirements without the need for extensive reconfiguration.

Improved Data Management: These integrations offer better control and management of data flows, enabling more efficient data processing, storage, and retrieval.

Increased Flexibility: With a wide range of services now integrated with AWS Step Functions, businesses have more options to tailor their workflows to specific needs, increasing overall system flexibility.

Faster Time-to-Insight: The streamlined processes enabled by these integrations allow for quicker data processing, leading to faster time-to-insight and decision-making.

Enhanced Security and Compliance: Because these integrations run on AWS services, workflows can use AWS security controls such as IAM permissions and encryption, which supports sensitive data processing and regulatory requirements.

Easier Integration with Existing Systems: These new integrations make it simpler to connect AWS Step Functions with existing systems and services, allowing for smoother digital transformation initiatives.

Global Reach: Services like Amazon CloudFront KeyValueStore enhance global data accessibility, ensuring high performance across geographical locations.

As businesses continue to navigate the challenges of digital transformation, these new AWS Step Functions integrations offer powerful solutions to streamline operations, enhance data processing capabilities, and drive innovation. At Cloudtech, we specialize in serverless data processing and event-driven architectures. Contact us today and ask how you can realize the benefits of these new AWS Step Functions integrations in your data architecture.

Revolutionize Your Search Engine with Amazon Personalize and Amazon OpenSearch Service

In today's digital landscape, user experience is paramount, and search engines play a pivotal role in shaping it. Imagine a world where your search engine not only understands your preferences and needs but anticipates them, delivering results that resonate with you on a personal level. This transformative user experience is made possible by the fusion of Amazon Personalize and Amazon OpenSearch Service.

Understanding Amazon Personalize

Amazon Personalize is a fully managed machine learning service that empowers businesses to develop and deploy personalized recommendation systems, search engines, and content recommendation engines. It is part of the AWS suite of services and can be integrated into web applications, mobile apps, and other digital platforms.

Key components and features of Amazon Personalize include:

Datasets: Users can import their own data, including user interaction data, item data, and demographic data, to train the machine learning models.

Recipes: Recipes are predefined machine learning algorithms and models that are designed for specific use cases, such as personalized product recommendations, personalized search results, or content recommendations.

Customization: Users have the flexibility to fine-tune and customize their machine learning models, allowing them to align the recommendations with their specific business goals and user preferences.

Real-Time Recommendations: Amazon Personalize can generate real-time recommendations for users based on their current behavior and interactions.

Batch Recommendations: Businesses can also generate batch recommendations for users, making it suitable for email campaigns, content recommendations, and more.

Benefits of Amazon Personalize

Amazon Personalize offers a range of benefits for businesses looking to enhance user experiences and drive engagement.

Improved User Engagement: By providing users with personalized content and recommendations, Amazon Personalize can significantly increase user engagement rates.

Higher Conversion Rates: Personalized recommendations often lead to higher conversion rates, as users are more likely to make purchases or engage with desired actions when presented with items or content tailored to their preferences.

Enhanced User Satisfaction: Personalization makes users feel understood and valued, leading to improved satisfaction with your platform. Satisfied users are more likely to become loyal customers.

Better Click-Through Rates (CTR): Personalized recommendations and search results can drive higher CTR as users are drawn to content that aligns with their interests, increasing their likelihood of clicking through to explore further.

Increased Revenue: The improved user engagement and conversion rates driven by Amazon Personalize can help cross-sell and upsell products or services effectively.

Efficient Content Discovery: Users can easily discover relevant content, products, or services, reducing the time and effort required to find what they are looking for.

Data-Driven Decision Making: Amazon Personalize provides valuable insights into user behavior and preferences, enabling businesses to make data-driven decisions and optimize their offerings.

Scalability: As an AWS service, Amazon Personalize is highly scalable and can accommodate businesses of all sizes, from startups to large enterprises.

Understanding Amazon OpenSearch Service

Amazon OpenSearch Service is a fully managed service for deploying, operating, and scaling OpenSearch, an open-source search and analytics engine that delivers fast, scalable, and highly relevant search results and analytics. OpenSearch is derived from the open-source Elasticsearch and Kibana projects and is designed to efficiently index, store, and search through vast amounts of data.

Benefits of Amazon OpenSearch Service in Search Enhancement

Amazon OpenSearch Service enhances search functionality in several ways:

High-Performance Search: OpenSearch Service enables organizations to rapidly execute complex queries on large datasets to deliver a responsive and seamless search experience.

Scalability: OpenSearch Service is designed to be horizontally scalable, allowing organizations to expand their search clusters as data and query loads increase, ensuring consistent search performance.

Relevance and Ranking: OpenSearch Service allows developers to customize ranking algorithms to ensure that the most relevant search results are presented to users.

Full-Text Search: OpenSearch Service excels in full-text search, making it well-suited for applications that require searching through text-heavy content such as documents, articles, logs, and more. It supports advanced text analysis and search features, including stemming and synonym matching.

Faceted Search: OpenSearch Service supports faceted search, enabling users to filter search results based on various attributes, categories, or metadata.

Analytics and Insights: Beyond search, OpenSearch Service offers analytics capabilities, allowing organizations to gain valuable insights into user behavior, query performance, and data trends to inform data-driven decisions and optimizations.

Security: OpenSearch Service offers access control, encryption, and authentication mechanisms to safeguard sensitive data and ensure secure search operations.

Open-Source Compatibility: While Amazon OpenSearch Service is a managed service, it also supports legacy Elasticsearch OSS versions (up to 7.10), so organizations can leverage their existing Elasticsearch skills and applications.

Integration Flexibility: OpenSearch Service can seamlessly integrate with various AWS services and third-party tools, enabling organizations to ingest data from multiple sources and build comprehensive search solutions.

Managed Service: Amazon OpenSearch Service is a fully managed service, which means AWS handles operational tasks such as cluster provisioning, maintenance, and scaling, allowing organizations to focus on developing applications and improving user experiences.
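As an illustration of full-text and faceted search working together, the sketch below builds an OpenSearch query DSL body in Python: a `multi_match` query for relevance ranking, an optional `term` filter, and `terms` aggregations that return facet counts. The `cars` index and its `title`, `description`, `brand`, and `year` fields are hypothetical, and `brand` is assumed to be mapped as a `keyword` field so it can be filtered and aggregated.

```python
import json
from typing import Optional


def build_search(text: str, brand: Optional[str] = None, size: int = 10) -> dict:
    """Full-text query plus facet aggregations for a hypothetical 'cars' index."""
    query: dict = {
        "bool": {
            "must": [{
                "multi_match": {
                    "query": text,
                    "fields": ["title^2", "description"],  # boost matches in the title
                }
            }]
        }
    }
    if brand:
        # Filters narrow the results without affecting relevance scores.
        query["bool"]["filter"] = [{"term": {"brand": brand}}]
    return {
        "size": size,
        "query": query,
        "aggs": {  # facet counts to show alongside the results
            "by_brand": {"terms": {"field": "brand", "size": 10}},
            "by_year": {"terms": {"field": "year", "size": 10}},
        },
    }


body = build_search("hybrid sedan", brand="Toyota")
print(json.dumps(body, indent=2))  # send as the body of POST /cars/_search
```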

Amazon Personalize and Amazon OpenSearch Service Integration

When you use Amazon Personalize with Amazon OpenSearch Service, Amazon Personalize re-ranks OpenSearch Service results based on a user's past behavior, any metadata about the items, and any metadata about the user. OpenSearch Service then incorporates the re-ranking before returning the search response to your application. You control how much weight OpenSearch Service gives the ranking from Amazon Personalize when applying it to OpenSearch Service results.

With this re-ranking, results can be more engaging and relevant to a user's interests. This can lead to an increase in the click-through rate and conversion rate for your application. For example, you might have an ecommerce application that sells cars. If your user enters a query for Toyota cars and you don't personalize results, OpenSearch Service would return a list of cars made by Toyota based on keywords in your data. This list would be ranked in the same order for all users. However, if you were to use Amazon Personalize, OpenSearch Service would re-rank these cars in order of relevance for the specific user based on their behavior so that the car that the user is most likely to click is ranked first.

When you personalize OpenSearch Service results, you control how much weight (emphasis) OpenSearch Service gives the ranking from Amazon Personalize to deliver the most relevant results. For instance, if a user searches for a specific type of car from a specific year (such as a 2008 Toyota Prius), you might want to put more emphasis on the original ranking from OpenSearch Service than from Personalize. However, for more generic queries that result in a wide range of results (such as a search for all Toyota vehicles), you might put a high emphasis on personalization. This way, the cars at the top of the list are more relevant to the particular user.
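The effect of the weight can be illustrated with a simple position-blending function. This is not the plugin's actual algorithm, which is not described here; it is a hypothetical sketch showing that a weight of 0 keeps the OpenSearch order, a weight of 1 follows the Amazon Personalize order, and values in between mix the two.

```python
def blend_rankings(opensearch_order: list[str], personalize_order: list[str], weight: float) -> list[str]:
    """Hypothetical re-ranking: weighted average of each item's position in both lists."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    os_pos = {item: i for i, item in enumerate(opensearch_order)}
    p_pos = {item: i for i, item in enumerate(personalize_order)}
    missing = len(opensearch_order)  # items Personalize did not rank go last in its ordering

    def blended(item: str) -> tuple[float, int]:
        score = (1 - weight) * os_pos[item] + weight * p_pos.get(item, missing)
        return (score, os_pos[item])  # lower is better; ties keep the OpenSearch order

    return sorted(opensearch_order, key=blended)


results = ["prius-2008", "camry-2015", "rav4-2020"]      # OpenSearch keyword ranking
preferences = ["rav4-2020", "prius-2008", "camry-2015"]  # Personalize ranking for one user
print(blend_rankings(results, preferences, 0.5))  # ['prius-2008', 'rav4-2020', 'camry-2015']
```

In the car example, a specific query such as "2008 Toyota Prius" would use a low weight, while a broad query such as "Toyota" could use a high one.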

How the Amazon Personalize Search Ranking plugin works

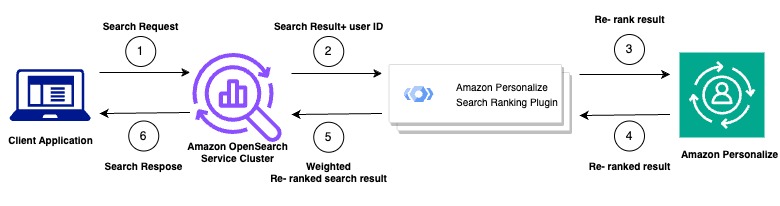

The following diagram shows how the Amazon Personalize Search Ranking plugin works.

- You submit your customer's query to your Amazon OpenSearch Service cluster.

- OpenSearch Service sends the query response and the user's ID to the Amazon Personalize search ranking plugin.

- The plugin sends the items and user information to your Amazon Personalize campaign for ranking. Using the recipe and campaign Amazon Resource Name (ARN) values you specified when setting up the plugin, it requests a personalized ranking for the user through the GetPersonalizedRanking API operation. The request includes the user's ID and the items returned by the OpenSearch Service query.

- Amazon Personalize returns the re-ranked results to the plugin.

- The plugin organizes and returns these search results to your OpenSearch Service cluster. It re-ranks the results based on the feedback from your Amazon Personalize campaign and the emphasis on personalization that you've defined during setup.

- Finally, your OpenSearch Service cluster sends the finalized results back to your application.
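The setup described above can be sketched as two request bodies, built here as Python dictionaries: a search pipeline with a `personalized_search_ranking` response processor, and a search request that passes the user ID. The field names follow our reading of the AWS documentation for the plugin and should be verified against it; all ARNs, the pipeline name, the index name, the item ID field, and the weight are placeholders.

```python
import json

PIPELINE = "personalized-cars"  # hypothetical pipeline name

# Body for: PUT /_search/pipeline/personalized-cars
pipeline_body = {
    "description": "Re-rank search hits with Amazon Personalize",
    "response_processors": [{
        "personalized_search_ranking": {
            "campaign_arn": "arn:aws:personalize:us-east-1:111122223333:campaign/example",
            "item_id_field": "item_id",  # document field holding the Personalize item ID
            "recipe": "aws-personalized-ranking",
            "weight": 0.3,  # emphasis on Personalize vs. the original OpenSearch order
            "tag": "personalize-processor",
            "iam_role_arn": "arn:aws:iam::111122223333:role/example-personalize-role",
            "aws_region": "us-east-1",
        }
    }],
}

# Body for: GET /cars/_search?search_pipeline=personalized-cars
search_body = {
    "query": {"match": {"title": "Toyota"}},
    "ext": {"personalize_request_parameters": {"user_id": "user-123"}},
}

print(f"PUT /_search/pipeline/{PIPELINE}")
print(json.dumps(pipeline_body, indent=2))
print(json.dumps(search_body, indent=2))
```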

Benefits of Amazon Personalize and Amazon OpenSearch Service Integration

Combining Amazon Personalize and Amazon OpenSearch Service maximizes user satisfaction through highly personalized search experiences:

Enhanced Relevance: The integration ensures that search results are tailored precisely to individual user preferences and behavior. Users are more likely to find what they are looking for quickly, resulting in a higher level of satisfaction.

Personalized Recommendations: Amazon Personalize's machine learning capabilities enable the generation of personalized recommendations within search results. This feature exposes users to items or content they may not have discovered otherwise, enriching their search experience.

User-Centric Experience: Personalized search results demonstrate that your platform understands and caters to each user's unique needs and preferences. This fosters a sense of appreciation and enhances user satisfaction.

Time Efficiency: Users can efficiently discover relevant content or products, saving time and effort in the search process.

Reduced Information Overload: Personalized search results also filter out irrelevant items to reduce information overload, making decision-making easier and more enjoyable.

Increased Engagement: Users are more likely to engage with content or products that resonate with their interests, leading to longer session durations and a greater likelihood of conversions.

Conclusion

Integrating Amazon Personalize and Amazon OpenSearch Service transforms user experiences, drives user engagement, and unlocks new growth opportunities for your platform or application. By embracing this innovative combination and encouraging its adoption, you can lead the way in delivering exceptional personalized search experiences in the digital age.


How does AWS TCO Analysis work?

Regardless of your business size, you can’t skip assessing the value of any product or service you plan to purchase. Investing in the right systems, processes, and infrastructure is essential to business success. But how do you make the right financial decisions and know whether a given product or service is actually generating value for your business?

Total Cost of Ownership (TCO) analysis is one method that can help in this situation, especially if you plan to analyze these costs on the cloud. Amazon Web Services (AWS) is a leading public cloud platform offering over 200 fully featured services, along with tools that support TCO analysis, which estimates the total cost of an asset or infrastructure on the cloud. AWS serves diverse customers, from startups to government agencies and the largest enterprises, and its agility, pace of innovation, security, and global network of data centers make it a comprehensive and adaptable platform.

Read on to learn more about the TCO analysis and how AWS TCO analysis works.

What is TCO analysis?

As the name suggests, TCO estimates costs associated with purchasing, deploying, operating, and maintaining any asset. The asset could be physical or virtual products, services, or tools. The TCO analysis’s primary purpose is to assess the asset’s cost throughout its life cycle and to determine the return on investment.

In the IT industry, TCO analysis covers costs related to hardware and software acquisition, end-user expenses, training, networks, servers, and communications. According to Gartner, “TCO is a comprehensive assessment of IT or other costs across enterprise boundaries over time.”

TCO analysis in Cloud

The adoption of cloud computing in business has also popularized TCO analysis for the cloud. Cloud TCO analysis does the same job for cloud infrastructure: it calculates the total costs of adopting, provisioning, and running cloud infrastructure. When you plan to migrate to the cloud, it helps you weigh your current costs against the costs of cloud adoption. Besides Amazon, other tech giants, including Microsoft, Google, and IBM, offer cloud TCO analysis. However, AWS remains the largest cloud service provider.

Why do businesses need AWS TCO analysis?

A TCO analysis helps you determine whether an investment is likely to produce a profit or a loss.

Let’s look at an example of how this relates to profit. Netflix, a top OTT platform, spent about $9.6 million per month on AWS in 2019, and according to this resource, that spend was projected to reach around $27.78 million per month by 2023. The company keeps increasing this investment because it pays off, and a TCO analysis is how a business can confirm where that return comes from. AWS gave Netflix a cost-effective, horizontally scalable cloud architecture and let the company focus on its core business, video streaming. Netflix is one of the most popular video streaming platforms in the world.

A second example shows how delaying a decision can cause losses. According to this report on 5G core (5GC) adoption, a TCO analysis found that postponing adoption increases the TCO over five years, which illustrates the cost of deciding without a TCO analysis.

These examples show why TCO analysis matters when you evaluate cloud infrastructure. Without it, you may miscalculate your IT budget or purchase inappropriate resources, which can lead to problems such as downtime and slower business operations. Because skipping TCO analysis directly affects financial decisions, treat it as a critical business activity, and use AWS TCO analysis to support your business success.

How does AWS TCO Analysis work?

AWS TCO analysis refers to calculating the direct and indirect costs associated with migrating, hosting, running, and maintaining IT infrastructure on the AWS cloud. It assesses all the costs of using AWS resources and compares the outcome to the TCO of an alternative cloud or on-premises platform.

AWS TCO Analysis is not a calculation of one resource or a one-step process. To understand how it works, you need to know the costs of your current IT infrastructure, understand cost factors, and how to optimize cloud costs when you deploy and manage scalable web applications or infrastructure hosted on-premises versus when you deploy them on the cloud.

Here are steps to help you understand how AWS TCO analysis works:

Preliminary steps – Know the current value and build a strategy

Step 1 – Evaluate the cost of your existing infrastructure or web application

You must calculate and analyze the direct and indirect costs of your existing on-premises IT infrastructure. Perform a TCO analysis of this infrastructure that covers the following components:

- Physical & virtual servers: They are the main pillars in developing the infrastructure

- Storage mediums: Cost of database, disks, or other storage devices

- Software & Applications: The analysis finds the cost of software and its ongoing upgrades. It also estimates the costs of acquiring licenses, subscriptions, royalties, and vendor fees

- Data centers: The analysis needs to include all data center costs, such as physical space, power, cooling, and racks

- Human Capital: Trainers, consultants, and the staff who run and maintain the setup.

- Networking & Security system: Find out the costs of these critical components

Don’t limit yourself to estimating direct and indirect costs. Also identify hidden costs, such as those caused by unplanned downtime, and opportunity costs; accounting for them now gives you a more realistic baseline for future decisions.
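Once you have listed the components, the arithmetic for a baseline is simple: one-time purchases, plus recurring costs multiplied by the analysis period, plus any estimated hidden costs. The sketch below uses made-up figures purely for illustration and ignores hardware refresh cycles, depreciation, and inflation, which a real analysis should include.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    name: str
    upfront: float = 0.0  # one-time purchase (capital expense)
    annual: float = 0.0   # recurring yearly cost (operating expense)


def on_prem_tco(items: list[CostItem], years: int, hidden_annual: float = 0.0) -> float:
    """Upfront costs + recurring costs over `years` + estimated hidden costs (e.g. downtime)."""
    upfront = sum(item.upfront for item in items)
    recurring = sum(item.annual for item in items) * years
    return upfront + recurring + hidden_annual * years


# Hypothetical figures in USD, one entry per component from the list above.
components = [
    CostItem("Physical & virtual servers", upfront=60_000, annual=6_000),
    CostItem("Storage", upfront=20_000, annual=2_000),
    CostItem("Software & licenses", annual=15_000),
    CostItem("Data center (space, power, cooling, racks)", annual=18_000),
    CostItem("Human capital", annual=90_000),
    CostItem("Networking & security", upfront=10_000, annual=4_000),
]

print(on_prem_tco(components, years=3, hidden_annual=5_000))  # 510000.0
```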

Step 2 – Build an appropriate cloud migration strategy

You must choose an appropriate AWS cloud migration strategy before calculating monthly AWS costs. AWS and AWS Partners offer many tools that support TCO analysis for migration, such as CloudChomp CC Analyzer, Cloudamize, and Migration Evaluator. These tools can help you evaluate your existing environment, determine workloads, and plan your AWS migration. They provide useful cost insights that help you make quick, effective decisions about migrating to AWS.

Primary step – Estimate AWS Cost

Know these cost factors

Every business has different objectives and operations, so cost analysis differs according to the AWS services, workloads, and servers involved and the way you purchase AWS resources. In short, your costs depend on how you actually use services and resources.

Still, you must consider the following factors directly impacting your AWS costs.

- Services you utilize: Many AWS compute services and instances are billed for usage time (per hour or, for some, per second), from when you launch a resource until you shut it down. You can also reserve capacity at a predetermined price in exchange for a discount.

- Data Transfer: AWS charges for outbound data transfer, aggregated across services, at set rates. Inbound data transfer is generally free, and some transfers between services within the same Region are free as well, but others (such as traffic between Availability Zones) are billed, so check data transfer costs before launching.

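- Storage: AWS charges per GB of data stored, at a rate that depends on the storage class, so understand the pricing of each class you use. Both hot and cold storage options are available: hot storage costs more to store data but provides fast, frequent access, while cold storage is cheaper to store but slower and more expensive to retrieve from.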

- Resource consumption model: AWS offers several ways to consume resources, such as On-Demand Instances, Reserved Instances (which provide a discount compared with On-Demand pricing in exchange for a commitment, with optional prepayment), and Savings Plans.
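These factors can be combined into a rough monthly estimate. The sketch below multiplies usage by unit rates for compute, storage, and outbound data transfer, treats inbound transfer as free, and models a commitment (Savings Plans or Reserved Instances) as a flat percentage discount. All rates, the free-transfer allowance, and the 30% discount are hypothetical placeholders; use the AWS Pricing Calculator for real figures. The 730 hours per month follows the convention commonly used in AWS pricing estimates.

```python
HOURS_PER_MONTH = 730  # common monthly-hours convention in AWS pricing estimates


def monthly_cloud_cost(
    instances: int,
    hourly_rate: float,
    storage_gb: float,
    storage_rate_per_gb: float,
    egress_gb: float,
    egress_rate_per_gb: float,
    free_egress_gb: float = 0.0,
    commitment_discount: float = 0.0,
) -> float:
    """Rough monthly estimate: compute + storage + outbound transfer (inbound assumed free)."""
    compute = instances * hourly_rate * HOURS_PER_MONTH * (1 - commitment_discount)
    storage = storage_gb * storage_rate_per_gb
    transfer = max(egress_gb - free_egress_gb, 0.0) * egress_rate_per_gb
    return round(compute + storage + transfer, 2)


# Hypothetical workload and rates (USD), for illustration only.
workload = dict(instances=4, hourly_rate=0.10, storage_gb=2_000, storage_rate_per_gb=0.023,
                egress_gb=500, egress_rate_per_gb=0.09, free_egress_gb=100)

on_demand = monthly_cloud_cost(**workload)
committed = monthly_cloud_cost(**workload, commitment_discount=0.30)
print(on_demand, committed)      # 374.0 286.4
print(round(committed * 36, 2))  # three-year figure to compare with an on-premises TCO
```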

Know how to use AWS Pricing Calculator

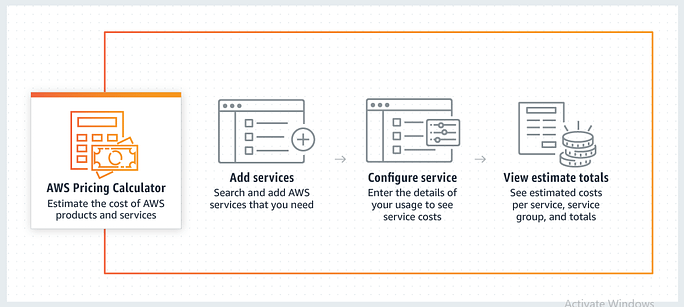

Once you analyze your compute resources and infrastructure to deploy, understand these factors, and decide on necessary AWS resources, you need to use AWS Pricing Calculator for expected cost estimation. This tool helps determine the total ownership cost. It is a web service that is freely available to end-users. It permits you to explore services according to need and estimate costs.

Look at the below image to see how this calculator works. You have to add required services, configure them by providing details, and see the generated costs.

Credit: Amazon AWS Pricing Calculator

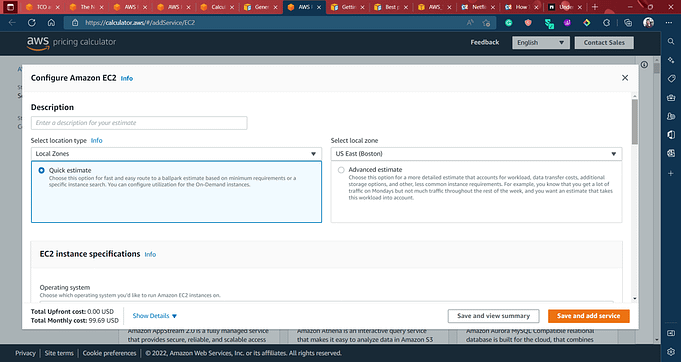

You can easily add the prices according to a group of services or individual services. After adding to the calculator, check the following snap-shot of the configuration service (EC2 service). You have to provide all required information such as location type, operating system, instance type, memory, pricing models, storage, and many more.

Credit: Amazon AWS Pricing Calculator

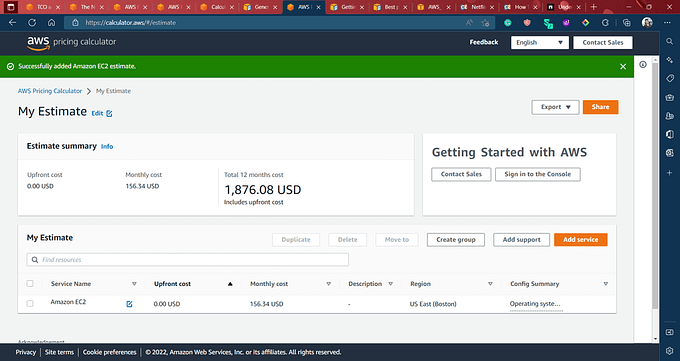

The best part is that you can download and share the results for further analysis. The following image is a dummy report to know that you can estimate the monthly cost, budget, and other factors with this summary.

Credit: Amazon AWS Pricing Calculator

Note: Check this link for the factors behind the pricing assumptions.

Know how to optimize cloud costs on AWS

Calculating your AWS costs is not enough; you also need to optimize them. AWS offers various options to manage, monitor, and optimize costs. Here are some tools you can use:

AWS Trusted Advisor

- Get recommendations for following AWS best practices to optimize costs and improve performance, security, and fault tolerance

- Optimize your cloud deployment through context-driven recommendations

AWS Cost Explorer

- Provides an interface to view, visualize, and manage your AWS costs and usage over time

- Features like filtering, grouping, and reporting can help you to manage costs efficiently

AWS Budgets

- Use this tool to track your costs and improve them for better budget planning and controlling

- You can also create custom actions that help prevent overages, inefficient resource usage, or lack of coverage

AWS Costs & Usages Report

- Leverage this tool to track your savings, costs, and cost drivers.

- You can easily integrate this report with an analytics report to get deep analysis

- It can help you to learn cost anomalies and trends in your bills
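Cost Explorer data can also be queried programmatically. The sketch below assumes the boto3 Cost Explorer client (`boto3.client("ce")`) and its `get_cost_and_usage` call, grouping unblended cost by service and following `NextPageToken` pagination. The client is passed in as a parameter so the parsing logic can be tried without AWS credentials; the dates shown are only examples, and a real call requires configured credentials and Cost Explorer access.

```python
def cost_by_service(ce_client, start: str, end: str) -> dict:
    """Return {service_name: unblended_cost_usd} for the period [start, end).

    ce_client is assumed to be a boto3 Cost Explorer client (or a stand-in with
    the same get_cost_and_usage method). Cost Explorer treats `end` as exclusive.
    """
    totals = {}
    kwargs = {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "DIMENSION", "Key": "SERVICE"}],
    }
    while True:
        response = ce_client.get_cost_and_usage(**kwargs)
        for period in response["ResultsByTime"]:
            for group in period.get("Groups", []):
                service = group["Keys"][0]
                amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
                totals[service] = totals.get(service, 0.0) + amount
        # Keep requesting pages until the API stops returning a token.
        token = response.get("NextPageToken")
        if not token:
            break
        kwargs["NextPageToken"] = token
    return totals


# Example usage with a real client (requires AWS credentials):
# import boto3
# ce = boto3.client("ce")
# print(cost_by_service(ce, "2024-01-01", "2024-02-01"))
```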

How Airbnb used the AWS Cost and Usage Report for AWS cost optimization

Airbnb is a community marketplace founded in 2008 and based in San Francisco. It has over 7 million accommodations and over 40,000 customers. In 2016, Airbnb decided to migrate all of its operations to AWS so that its infrastructure could scale automatically. The move worked: within 3 years, the company grew significantly and reduced its expenses using various AWS services (Amazon EC2, Amazon S3, Amazon EMR, and others). In 2021, the company used the AWS Cost and Usage Report, Savings Plans, and actionable data to optimize its AWS costs. The outcome: a 27% reduction in storage costs and a 60% reduction in Amazon OpenSearch Service costs.

The company built a customized cost and usage data tool on AWS services, which helps it reduce costs and deliver actionable business metrics.

Final Stage: Avoid these mistakes

Businesses often make mistakes, such as misconfiguring services or choosing the wrong resources, that increase costs. Keep the following points in mind to avoid them:

- Never set up cloud resources without auto-scaling or other monitoring tools. This mistake happens most often in dev/test environments.

- Take care when configuring storage resources, storage classes, and data types. Misconfiguration often happens when choosing storage tiers, such as those in Amazon Simple Storage Service (S3).

- Avoid over-provisioning by consolidating resources properly. Right-sizing helps you find the best match between instance types, sizes, and capacity requirements at the lowest cost.

- Choose a pricing model carefully based on your infrastructure requirements; the wrong choice can make your cloud deployment expensive.

- Don't ignore newer technologies; they can reduce your cloud spending and increase productivity.
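Right-sizing can be illustrated as a simple selection problem: given observed peak usage plus some headroom, pick the cheapest instance type whose capacity covers it. In the minimal sketch below, the instance catalog, its names, and its prices are hypothetical placeholders rather than real AWS offerings, and the 20% headroom is an assumed default; in practice the peak figures would come from your monitoring data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float  # hypothetical price


# Hypothetical catalog: names and prices are placeholders, not real AWS instance types.
CATALOG = [
    InstanceType("small", 2, 4, 0.04),
    InstanceType("medium", 2, 8, 0.08),
    InstanceType("large", 4, 16, 0.17),
    InstanceType("xlarge", 8, 32, 0.34),
]


def right_size(peak_vcpus: float, peak_memory_gib: float,
               headroom: float = 0.2) -> Optional[InstanceType]:
    """Return the cheapest type covering peak usage plus headroom, or None if nothing fits."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    candidates = [t for t in CATALOG
                  if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    if not candidates:
        return None
    return min(candidates, key=lambda t: t.hourly_usd)


# A workload peaking at 1.5 vCPUs and 5 GiB fits the "medium" type,
# so running it on "xlarge" would be over-provisioned.
print(right_size(1.5, 5))
```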

Closing Thought

The report shows that AWS can help businesses save up to 80% compared with equivalent on-premises options. By lowering costs, it lets companies reinvest the savings in innovation. So what are you waiting for? Plan the migration of your on-premises IT infrastructure to the AWS cloud, calculate costs by following the steps above, and optimize them by avoiding typical mistakes.

Managing your IT infrastructure's overall direct and indirect costs takes time and a defined process. TCO analysis for a cloud migration project can be daunting, but AWS TCO analysis makes this complex process easier. Take advantage of it to determine the cost of your cloud migration project.
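At its core, a TCO comparison adds one-time costs to recurring direct and indirect costs over a chosen horizon, for both the on-premises and the cloud option, and then compares the totals. The sketch below uses entirely hypothetical dollar figures and an assumed 3-year horizon; it illustrates the formula only and does not reproduce any AWS TCO tool.

```python
def tco(direct_annual: float, indirect_annual: float,
        one_time: float, years: int) -> float:
    """Total cost of ownership = one-time costs + (direct + indirect annual costs) x years."""
    return one_time + (direct_annual + indirect_annual) * years


def savings_pct(on_prem: float, cloud: float) -> float:
    """Percentage saved by the cloud option relative to on-premises."""
    return round(100 * (on_prem - cloud) / on_prem, 1)


# Hypothetical 3-year figures in USD. Direct costs might cover hardware, licenses,
# or compute; indirect costs might cover staff time and facilities. One-time costs
# might be hardware purchase (on-premises) or migration effort (cloud).
on_prem = tco(direct_annual=120_000, indirect_annual=40_000, one_time=200_000, years=3)
cloud = tco(direct_annual=90_000, indirect_annual=15_000, one_time=50_000, years=3)
print(f"On-premises: ${on_prem:,}  Cloud: ${cloud:,}  Savings: {savings_pct(on_prem, cloud)}%")
```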